Creating expired X.509 certificates

- May 10, 2020

- tuxotron

Last week, while preparing the material for a workshop about TLS for developers, I needed to create an expired certificate for one of the exercises.

I assumed that, when creating my (self-signed) certificate, all I had to do was pass a negative number of days for the certificate’s validity.

So I went ahead and ran the following command:

openssl req -x509 -newkey rsa:4096 -keyout server.key -out server.crt -days -5

req: Non-positive number "-5" for -days

req: Use -help for summary.

As you can see, that didn’t work.

After looking around for a bit, all the solutions I initially found involved changing my system’s date and then generating the certificate. I didn’t like that option, so my next thought was to create the certificate in a Docker container: I would change the container’s date and then run my openssl command. Let’s try that:

docker run -it --rm ubuntu:18.04

root@c09ad5d2689d:/# date +%Y%m%d -s "20200101"

date: cannot set date: Operation not permitted

20200101

root@c09ad5d2689d:/#

As you can see, that didn’t work either. Can you change the date from inside the container? You can, but you need to run the container with higher privileges (--privileged). The reason is that the container shares the host’s clock, so changing the date inside the container would also change the host’s date. So, back to square one.

I went back to look for other options and finally found a nice tool called faketime. According to its documentation, it is just a simple layer on top of libfaketime, a library that intercepts some system calls (such as time and fstat) and, through the LD_PRELOAD mechanism, lets you set a specific date and time for a particular application.
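To make the LD_PRELOAD part less abstract, here is a minimal sketch of roughly what the faketime wrapper does for you: it sets two environment variables that libfaketime reads and then runs the command. The library path below is my assumption (it is where Debian and Ubuntu usually install it), and with_fake_time is a hypothetical helper name, so adjust both to your system.

```shell
#!/bin/sh
# Assumed location of libfaketime on Debian/Ubuntu; override LIBFAKETIME if yours differs.
: "${LIBFAKETIME:=/usr/lib/x86_64-linux-gnu/faketime/libfaketime.so.1}"

# with_fake_time TIMESTAMP COMMAND [ARGS...]
# Runs COMMAND with libfaketime preloaded. The leading "@" asks libfaketime
# to start the clock at TIMESTAMP and let it keep ticking from there.
with_fake_time() {
    ts=$1
    shift
    env LD_PRELOAD="$LIBFAKETIME" FAKETIME="@$ts" "$@"
}

# Example (not run here): with_fake_time '2020-01-01 00:00:00' date
```

In practice the faketime command is more convenient, since it locates the library for you and accepts friendlier date formats.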

So, going back to my quest, let’s create a certificate valid for only one day, between the 1st and the 2nd of January 2020:

faketime '2020-01-01' openssl req -x509 -newkey rsa:4096 -keyout server.key -out server.crt -days 1

Let’s verify that our certificate has the validity period we meant to set:

openssl x509 -in server.crt -text -noout

...

Validity

Not Before: Jan 1 00:00:05 2020 GMT

Not After : Jan 2 00:00:05 2020 GMT

...

That worked!
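If you prefer a yes/no answer over reading the dates yourself, openssl can check expiry directly. The following is a small sketch built on the -checkend option of openssl x509; cert_status is a hypothetical helper name of mine, not something that ships with openssl.

```shell
#!/bin/sh
# cert_status FILE
# Prints "expired" or "valid" for a PEM certificate.
# `openssl x509 -checkend 0` exits non-zero when the certificate
# has already passed its Not After date.
cert_status() {
    [ -r "$1" ] || { echo "cannot read $1" >&2; return 2; }
    if openssl x509 -in "$1" -noout -checkend 0 >/dev/null 2>&1; then
        echo "valid"
    else
        echo "expired"
    fi
}

# Example (not run here): cert_status server.crt
```

Note that any other openssl failure (for instance, a file that is not a certificate) is also reported as "expired" by this sketch.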

faketime accepts different ways to set the date and time:

faketime 'last Friday 5 pm' /bin/date

faketime '2008-12-24 08:15:42' /bin/date

faketime -f '+2,5y x10,0' /bin/bash -c 'date; while true; do echo $SECONDS ; sleep 1 ; done'

...

...

You can either install faketime on your system or use a Docker container. If you go with the container option, you can build the image manually or with doig:

doig -u -i mytools -t faketime

And then run your container:

docker run -it --rm mytools

From there you can play with faketime without having to worry about messing up your system’s date.

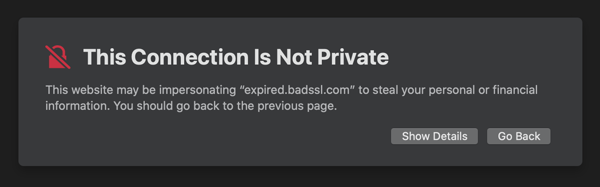

By the way, if you are looking for “certificates with issues”, https://badssl.com is a great resource for finding all kinds of certificates with different issues.

Docker image generator for infosec

- April 12, 2020

- tuxotron

This is a project I have entertained in my head for a while with my brother in arms Fran Ramírez, and finally I have found some time to work on it.

The idea is pretty simple: create a Docker image with a number of tools of your choice, without having to create, or know how to create, a Docker image yourself.

For instance, let’s say we want to run sqlmap, we don’t have our lovely Kali distro with us, and we don’t want to install the tool on our system. A very convenient and clean way would be to run the tool from inside a Docker container: we spin up the container, run the tool, exit, and we are done. Our system stays “clean”.

To do that, we would have to create a Dockerfile, with the instructions to install sqlmap, and then create the Docker image from it. Or maybe find an already existing Docker image and use it.
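To give an idea of that manual route, here is a rough sketch. The base image, the pip-based installation and the my-sqlmap tag are all assumptions of mine for illustration; this is not what doig generates.

```shell
#!/bin/sh
# render_dockerfile: prints a minimal Dockerfile that installs sqlmap.
# Assumptions: python:3-slim as the base image and sqlmap installed from PyPI.
render_dockerfile() {
    cat <<'EOF'
FROM python:3-slim
RUN pip install --no-cache-dir sqlmap
ENTRYPOINT ["sqlmap"]
EOF
}

# Example (not run here):
#   render_dockerfile > Dockerfile
#   docker build -t my-sqlmap .
#   docker run --rm -it my-sqlmap --help
```

It is only three lines for one tool, but you still have to know how each tool is installed and keep a Dockerfile around for every combination you need.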

Docker Image Generator is a project that helps you to create Docker images without having to create a Dockerfile and build it yourself, with the tools you choose, as long as they are available in the project.

Right now, the focus of this project is infosec, so all the tools available are security related, but it could easily be extended with any other tool that can run inside a Docker container.

I have created binaries for Linux, macOS and Windows for your convenience, but if you prefer to build the binary yourself, you will need a Go version that supports modules. Clone the repository and run:

go build

Or use make:

make build

Whether you downloaded the .tgz linked above and extracted its content, or cloned and compiled the code yourself, you should have at least a binary called doig, a directory called tools and a file called Dockerfile.template.

If you look inside the tools directory, you can see all the tools available. Those files are simple .ini text files with a few properties that define the name, the category and the command that needs to run as part of the RUN Dockerfile instruction.

To see some examples of use, please check out the repository of the project.

The project is in an early phase and I’m working on improving the code (refactoring, testing, etc), adding new functionality and more tools. By the way, pull requests are welcome!

I would like to thank some friends: Oscar for his active collaboration, as well as Fran, Alberto and Enrique for their ideas and support.

Extending kubectl

- September 7, 2019

- tuxotron

As you probably already know, kubectl is the official tool to interact with Kubernetes from the command line. Besides all the functionality it already provides, it can be extended through plugins.

A kubectl plugin is nothing but a file that meets the following three requirements:

- It has to be an executable (binary or script)

- It must be in your system’s PATH

- Its name must start with kubectl- (including the dash!)

The plugin system in kubectl was introduced as alpha in version 1.8.0 and rewritten in version 1.12.0, which is the minimum recommended version if you are going to play with this feature.

Let’s write our first plugin. It will be a bash script named kubectl-hello. This is its content:

#!/bin/bash

echo "Hello, World!"

Prefixing the file name with kubectl- is one of the requirements. Another requirement is to make it executable:

chmod +x kubectl-hello

The last requirement is to make sure the file is somewhere in the PATH. You can either copy the file to a directory that is already in the PATH, or add the directory where you created the file to the PATH. In our case, we’ll take the second approach:

export PATH=$PATH:~/tmp/kplugin

This command only takes effect in the session where you run it. Once that session is closed (or if you open a different terminal), your directory will no longer be part of your PATH. To make it persistent, add that command to your ~/.bashrc, ~/.zshrc or similar.

Now we can run our plugin:

kubectl hello

Hello, World!
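When a plugin does not show up, it is usually because one of the three requirements is not met. Here is a small sketch that checks them for a given file. The helper name is_kubectl_plugin is hypothetical and this is not how kubectl itself discovers plugins; it is just a quick sanity check.

```shell
#!/bin/sh
# is_kubectl_plugin FILE
# Checks the three requirements described in this post: FILE is executable,
# its name starts with "kubectl-", and its directory is listed in PATH.
is_kubectl_plugin() {
    file=$1
    name=$(basename "$file")
    dir=$(cd "$(dirname "$file")" 2>/dev/null && pwd) || { echo "no such directory"; return 1; }

    if [ ! -f "$file" ] || [ ! -x "$file" ]; then
        echo "not an executable file: $file"; return 1
    fi
    case $name in
        kubectl-?*) ;;
        *) echo "name must start with kubectl-"; return 1 ;;
    esac
    case ":$PATH:" in
        *":$dir:"*) ;;
        *) echo "$dir is not in PATH"; return 1 ;;
    esac
    echo "ok: $name"
}

# Example (not run here): is_kubectl_plugin ~/tmp/kplugin/kubectl-hello
```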

Let’s create another plugin, a slightly more useful one this time. It will also be a bash script. This plugin generates a configuration file with the given account’s credentials (in this case a service account). This is pretty handy when you need to interact with a Kubernetes cluster from, let’s say, your CI/CD server. To give your server access to the cluster, you need to provide some type of credential, so you can use this plugin to create a configuration file based on a service account, with the necessary credentials and cluster information.

Our file will have the following content:

#!/bin/bash

set -e

usage="
USAGE:
  kubectl kubeconf-generator -a SERVICE_ACCOUNT -n NAMESPACE
"

while getopts a:n: option
do
  case "${option}"
  in
    a) SA=${OPTARG};;
    n) NAMESPACE=${OPTARG};;
  esac
done

[[ -z "$SA" ]] && { echo "Service account is required" ; echo "$usage" ; exit 1; }
[[ -z "$NAMESPACE" ]] && { echo "Namespace is required" ; echo "$usage" ; exit 1; }

# Get secret name
SECRET_NAME=$(kubectl get sa "$SA" -n "$NAMESPACE" -o jsonpath='{.secrets[0].name}')

# Get secret value (--decode works with both GNU and macOS base64)
SECRET=$(kubectl get secret "$SECRET_NAME" -n "$NAMESPACE" -o jsonpath='{.data.token}' | base64 --decode)

# Get cluster server name
SERVER=$(kubectl config view --minify -o json | jq -r '.clusters[].cluster.server')

# Get cluster name
CLUSTER_NAME=$(kubectl config view --minify -o json | jq -r '.clusters[].name')

# Get cluster certs
CERTS=$(kubectl config view --raw --minify -o json | jq -r '.clusters[].cluster."certificate-authority-data"')

# Print the resulting kubeconfig to stdout
cat << EOM
apiVersion: v1
kind: Config
users:
- name: $SA
  user:
    token: $SECRET
clusters:
- cluster:
    certificate-authority-data: $CERTS
    server: $SERVER
  name: $CLUSTER_NAME
contexts:
- context:
    cluster: $CLUSTER_NAME
    user: $SA
  name: svcs-acct-context
current-context: svcs-acct-context
EOM

As you can see, besides kubectl, this plugin also uses jq, so to make it work you need to have that tool installed as well.

The plugin expects two parameters: the service account and the namespace for such account.

Let’s dump the content listed above into a file named kubectl-kubeconf_generator. Pay attention to the underscore character. We’ll come back to this later.

Let’s run our plugin without any parameters:

kubectl kubeconf_generator

Service account is required

USAGE:

kubectl-kubeconf_generator -a SERVICE_ACCOUNT -n NAMESPACE

Now let’s call it with the service account (default) and the namespace (default). This time the plugin will try to fetch some information from the cluster defined in your current context. In this case I’m using minikube (if you are too, make sure it is up and running):

kubectl kubeconf_generator -a default -n default

apiVersion: v1
kind: Config
users:
- name: default
  user:
    token: REDACTADO
clusters:
- cluster:
    certificate-authority-data: null
    server: https://192.168.99.110:8443
  name: minikube
contexts:
- context:
    cluster: minikube
    user: default
  name: svcs-acct-context
current-context: svcs-acct-context

The output is a configuration file we could use to provide access to our minikube using the default service account from the default namespace (with whatever permissions granted to that account).
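Since the plugin prints the configuration to standard output, you will normally want to redirect it to a file. The following sketch does that while keeping the file private, because it contains a bearer token. The helper name save_sa_kubeconfig and the file name sa.kubeconfig are hypothetical; --kubeconfig is a regular kubectl flag.

```shell
#!/bin/sh
# save_sa_kubeconfig SERVICE_ACCOUNT NAMESPACE OUTPUT_FILE
# Writes the plugin output to OUTPUT_FILE. The umask makes a newly created
# file readable and writable by the current user only.
save_sa_kubeconfig() {
    (
        umask 077
        kubectl kubeconf_generator -a "$1" -n "$2" > "$3"
    )
}

# Example (not run here; needs a reachable cluster):
#   save_sa_kubeconfig default default ./sa.kubeconfig
#   kubectl --kubeconfig ./sa.kubeconfig get pods
```

If the output file already exists, its current permissions are kept, so check them before writing a token into it.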

Let’s go back to the name of the file. Remember, in our case we named our file kubectl-kubeconf_generator. When kubectl sees an underscore character in the plugin name, it allows us to call the plugin with either the underscore or a dash:

kubectl kubeconf_generator -a default -n default

...

or

kubectl kubeconf-generator -a default -n default

...

Now, if you rename the file to kubectl-kubeconf-generator (replacing the underscore with a dash), kubectl treats it as a command and a subcommand. So to call it this time, we need a blank space between kubeconf and generator:

kubectl kubeconf generator -a default -n default

...
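The naming rule can be summarized with a few lines of shell. This sketch only mirrors the behaviour described in this post (dashes separate command words, underscores can be typed as dashes); it is not kubectl’s actual lookup code, and plugin_invocations is a hypothetical name.

```shell
#!/bin/sh
# plugin_invocations FILENAME
# Prints the ways a plugin file can be invoked, following the rules above:
# each dash in the file name separates a command word, and an underscore
# inside a word can be typed either as "_" or as "-".
plugin_invocations() {
    name=${1#kubectl-}                                   # drop the mandatory prefix
    words=$(printf '%s' "$name" | sed 's/-/ /g')         # dashes become spaces
    echo "kubectl $words"
    case $words in
        *_*) echo "kubectl $(printf '%s' "$words" | sed 's/_/-/g')" ;;
    esac
}

# Example: plugin_invocations kubectl-kubeconf_generator
```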

Following this pattern we can create subcommands for our plugin command. For instance, imagine we want to be able to generate two different output formats: yaml and json. We could create two files:

kubectl-kubeconf-generator-yaml

kubectl-kubeconf-generator-json

They would be invoked this way:

kubectl kubeconf generator json ...

...

kubectl kubeconf generator yaml ...

...

This example is not the best way to customize the output. For that you may want to use a parameter, in this case probably -o to align with kubectl “standards”.
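As a rough idea of what the parameter approach could look like, here is a sketch that extends the getopts loop used by the plugin with a hypothetical -o option. The parse_args function, the OUTPUT variable and the accepted values (yaml and json) are assumptions for illustration; the plugin shown earlier does not implement them.

```shell
#!/bin/sh
# parse_args: hypothetical option parsing with an added -o FORMAT flag.
# Sets SA, NAMESPACE and OUTPUT (default: yaml); rejects unknown formats.
parse_args() {
    SA=
    NAMESPACE=
    OUTPUT=yaml
    OPTIND=1          # reset so the function can be called more than once
    while getopts a:n:o: option; do
        case $option in
            a) SA=$OPTARG ;;
            n) NAMESPACE=$OPTARG ;;
            o) OUTPUT=$OPTARG ;;
            *) return 1 ;;
        esac
    done
    case $OUTPUT in
        yaml|json) ;;
        *) echo "unsupported output format: $OUTPUT" >&2; return 1 ;;
    esac
}

# Example: parse_args -a default -n default -o json
```

With this in place, a single plugin file can pick the output format based on $OUTPUT instead of needing one file per format.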

To wrap up, it is worth mentioning that you can’t override an existing kubectl command. For instance, kubectl provides the version command, so if you create a new plugin named kubectl-version, calling kubectl version will run the internal version command and not your plugin. There are also some rules about name conflicts (for example, when you have two plugins with the same name in different directories), but I’m not going to touch on those in this entry. You can always consult the official documentation linked at the beginning of this post.

Last but not least, I just want to mention that kubectl has a plugin command with the list option which will show us the kubectl plugins in our system:

kubectl plugin list

The following compatible plugins are available:

/Users/tuxotron/tmp/kplugin/kubectl-hello

/Users/tuxotron/tmp/kplugin/kubectl-kubeconf-generator

In a future entry I will talk about a cleaner way to handle and install plugins.
